In a recent project, we were tasked with designing how we would replace a

Mainframe system with a cloud native application, building a roadmap and a

business case to secure funding for the multi-year modernisation effort

required. We were wary of the risks and potential pitfalls of a Big Design

Up Front, so we advised our client to work on a ‘just enough, and just in

time’ upfront design, with engineering during the first phase. Our client

liked our approach and selected us as their partner.

The system was built for a UK-based client’s Data Platform and

customer-facing products. This was a very complex and challenging task given

the size of the Mainframe, which had been built over 40 years, with a

variety of technologies that have significantly changed since they were

first released.

Our approach is based on incrementally moving capabilities from the

mainframe to the cloud, allowing a gradual legacy displacement rather than a

“Big Bang” cutover. In order to do this we needed to identify places in the

mainframe design where we could create seams: places where we can insert new

behavior with the smallest possible changes to the mainframe’s code. We can

then use these seams to create duplicate capabilities on the cloud, dual run

them with the mainframe to verify their behavior, and then retire the

mainframe capability.

Thoughtworks were involved for the first year of the programme, after which we handed over our work to our client

to take it forward. In that timeframe, we did not put our work into production, nevertheless, we trialled multiple

approaches that can help you get started more quickly and ease your own Mainframe modernisation journeys. This

article provides an overview of the context in which we worked, and outlines the approach we followed for

incrementally moving capabilities off the Mainframe.

Contextual Background

The Mainframe hosted a diverse range of

services crucial to the client’s business operations. Our programme

specifically focused on the data platform designed for insights on Consumers

in UK&I (United Kingdom & Ireland). This particular subsystem on the

Mainframe comprised approximately 7 million lines of code, developed over a

span of 40 years. It provided roughly ~50% of the capabilities of the UK&I

estate, but accounted for ~80% of MIPS (Million instructions per second)

from a runtime perspective. The system was significantly complex, the

complexity was further exacerbated by domain responsibilities and concerns

spread across multiple layers of the legacy environment.

Several reasons drove the client’s decision to transition away from the

Mainframe environment, these are the following:

- Changes to the system were slow and expensive. The business therefore had

challenges keeping pace with the rapidly evolving market, preventing

innovation. - Operational costs associated with running the Mainframe system were high;

the client faced a commercial risk with an imminent price increase from a core

software vendor. - Whilst our client had the necessary skill sets for running the Mainframe,

it had proven to be hard to find new professionals with expertise in this tech

stack, as the pool of skilled engineers in this domain is limited. Furthermore,

the job market does not offer as many opportunities for Mainframes, thus people

are not incentivised to learn how to develop and operate them.

High-level view of Consumer Subsystem

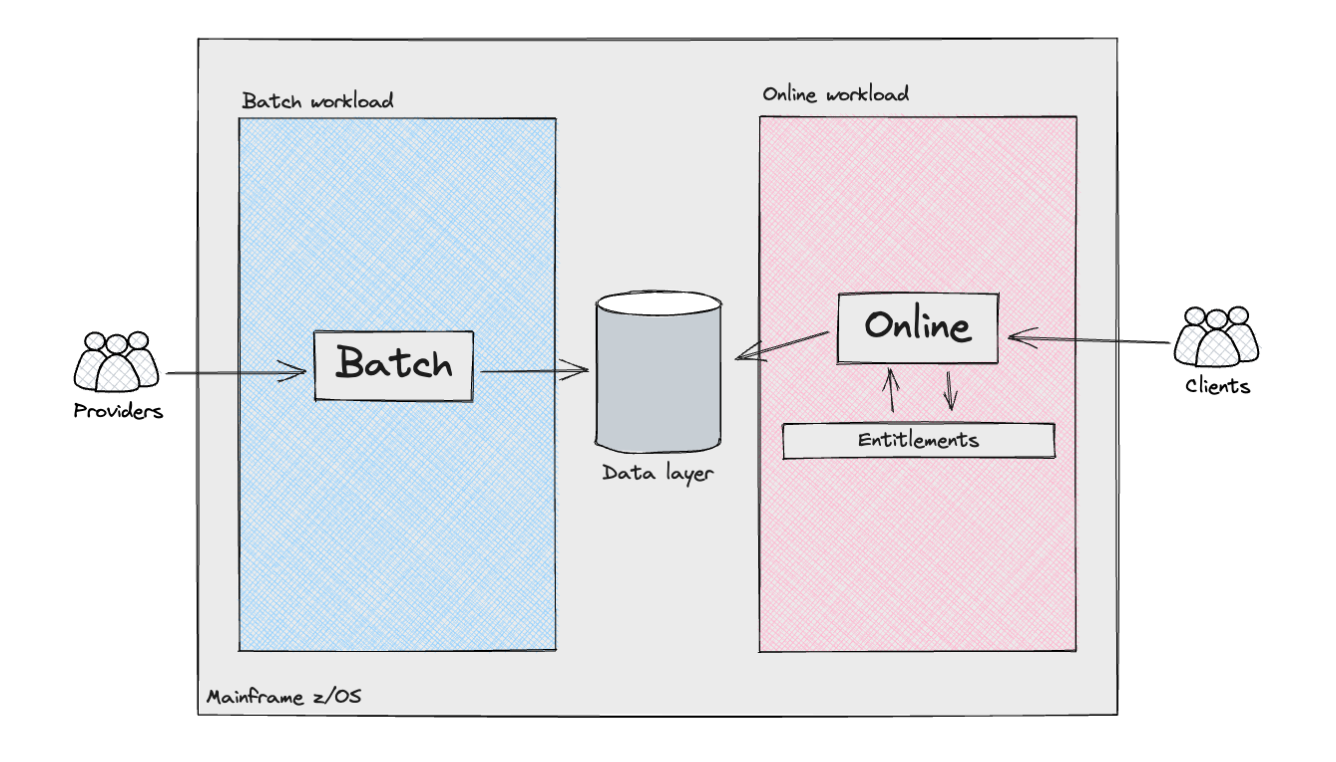

The following diagram shows, from a high-level perspective, the various

components and actors in the Consumer subsystem.

The Mainframe supported two distinct types of workloads: batch

processing and, for the product API layers, online transactions. The batch

workloads resembled what is commonly referred to as a data pipeline. They

involved the ingestion of semi-structured data from external

providers/sources, or other internal Mainframe systems, followed by data

cleansing and modelling to align with the requirements of the Consumer

Subsystem. These pipelines incorporated various complexities, including

the implementation of the Identity searching logic: in the United Kingdom,

unlike the United States with its social security number, there is no

universally unique identifier for residents. Consequently, companies

operating in the UK&I must employ customised algorithms to accurately

determine the individual identities associated with that data.

The online workload also presented significant complexities. The

orchestration of API requests was managed by multiple internally developed

frameworks, which determined the program execution flow by lookups in

datastores, alongside handling conditional branches by analysing the

output of the code. We should not overlook the level of customisation this

framework applied for each customer. For example, some flows were

orchestrated with ad-hoc configuration, catering for implementation

details or specific needs of the systems interacting with our client’s

online products. These configurations were exceptional at first, but they

likely became the norm over time, as our client augmented their online

offerings.

This was implemented through an Entitlements engine which operated

across layers to ensure that customers accessing products and underlying

data were authenticated and authorised to retrieve either raw or

aggregated data, which would then be exposed to them through an API

response.

Incremental Legacy Displacement: Principles, Benefits, and

Considerations

Considering the scope, risks, and complexity of the Consumer Subsystem,

we believed the following principles would be tightly linked with us

succeeding with the programme:

- Early Risk Reduction: With engineering starting from the

beginning, the implementation of a “Fail-Fast” approach would help us

identify potential pitfalls and uncertainties early, thus preventing

delays from a programme delivery standpoint. These were: - Outcome Parity: The client emphasised the importance of

upholding outcome parity between the existing legacy system and the

new system (It is important to note that this concept differs from

Feature Parity). In the client’s Legacy system, various

attributes were generated for each consumer, and given the strict

industry regulations, maintaining continuity was essential to ensure

contractual compliance. We needed to proactively identify

discrepancies in data early on, promptly address or explain them, and

establish trust and confidence with both our client and their

respective customers at an early stage. - Cross-functional requirements: The Mainframe is a highly

performant machine, and there were uncertainties that a solution on

the Cloud would satisfy the Cross-functional requirements. - Deliver Value Early: Collaboration with the client would

ensure we could identify a subset of the most critical Business

Capabilities we could deliver early, ensuring we could break the system

apart into smaller increments. These represented thin-slices of the

overall system. Our goal was to build upon these slices iteratively and

frequently, helping us accelerate our overall learning in the domain.

Furthermore, working through a thin-slice helps reduce the cognitive

load required from the team, thus preventing analysis paralysis and

ensuring value would be consistently delivered. To achieve this, a

platform built around the Mainframe that provides better control over

clients’ migration strategies plays a vital role. Using patterns such as

Dark Launching and Canary

Release would place us in the driver’s seat for a smooth

transition to the Cloud. Our goal was to achieve a silent migration

process, where customers would seamlessly transition between systems

without any noticeable impact. This could only be possible through

comprehensive comparison testing and continuous monitoring of outputs

from both systems.

With the above principles and requirements in mind, we opted for an

Incremental Legacy Displacement approach in conjunction with Dual

Run. Effectively, for each slice of the system we were rebuilding on the

Cloud, we were planning to feed both the new and as-is system with the

same inputs and run them in parallel. This allows us to extract both

systems’ outputs and check if they are the same, or at least within an

acceptable tolerance. In this context, we defined Incremental Dual

Run as: using a Transitional

Architecture to support slice-by-slice displacement of capability

away from a legacy environment, thereby enabling target and as-is systems

to run temporarily in parallel and deliver value.

We decided to adopt this architectural pattern to strike a balance

between delivering value, discovering and managing risks early on,

ensuring outcome parity, and maintaining a smooth transition for our

client throughout the duration of the programme.

Incremental Legacy Displacement approach

To accomplish the offloading of capabilities to our target

architecture, the team worked closely with Mainframe SMEs (Subject Matter

Experts) and our client’s engineers. This collaboration facilitated a

just enough understanding of the current as-is landscape, in terms of both

technical and business capabilities; it helped us design a Transitional

Architecture to connect the existing Mainframe to the Cloud-based system,

the latter being developed by other delivery workstreams in the

programme.

Our approach began with the decomposition of the

Consumer subsystem into specific business and technical domains, including

data load, data retrieval & aggregation, and the product layer

accessible through external-facing APIs.

Because of our client’s business

purpose, we recognised early that we could exploit a major technical boundary to organise our programme. The

client’s workload was largely analytical, processing mostly external data

to produce insight which was sold on to clients. We therefore saw an

opportunity to split our transformation programme in two parts, one around

data curation, the other around data serving and product use cases using

data interactions as a seam. This was the first high level seam identified.

Following that, we then needed to further break down the programme into

smaller increments.

On the data curation side, we identified that the data sets were

managed largely independently of each other; that is, while there were

upstream and downstream dependencies, there was no entanglement of the datasets during curation, i.e.

ingested data sets had a one to one mapping to their input files.

.

We then collaborated closely with SMEs to identify the seams

within the technical implementation (laid out below) to plan how we could

deliver a cloud migration for any given data set, eventually to the level

where they could be delivered in any order (Database Writers Processing Pipeline Seam, Coarse Seam: Batch Pipeline Step Handoff as Seam,

and Most Granular: Data Characteristic

Seam). As long as up- and downstream dependencies could exchange data

from the new cloud system, these workloads could be modernised

independently of each other.

On the serving and product side, we found that any given product used

80% of the capabilities and data sets that our client had created. We

needed to find a different approach. After investigation of the way access

was sold to customers, we found that we could take a “customer segment”

approach to deliver the work incrementally. This entailed finding an

initial subset of customers who had purchased a smaller percentage of the

capabilities and data, reducing the scope and time needed to deliver the

first increment. Subsequent increments would build on top of prior work,

enabling further customer segments to be cut over from the as-is to the

target architecture. This required using a different set of seams and

transitional architecture, which we discuss in Database Readers and Downstream processing as a Seam.

Effectively, we ran a thorough analysis of the components that, from a

business perspective, functioned as a cohesive whole but were built as

distinct elements that could be migrated independently to the Cloud and

laid this out as a programme of sequenced increments.