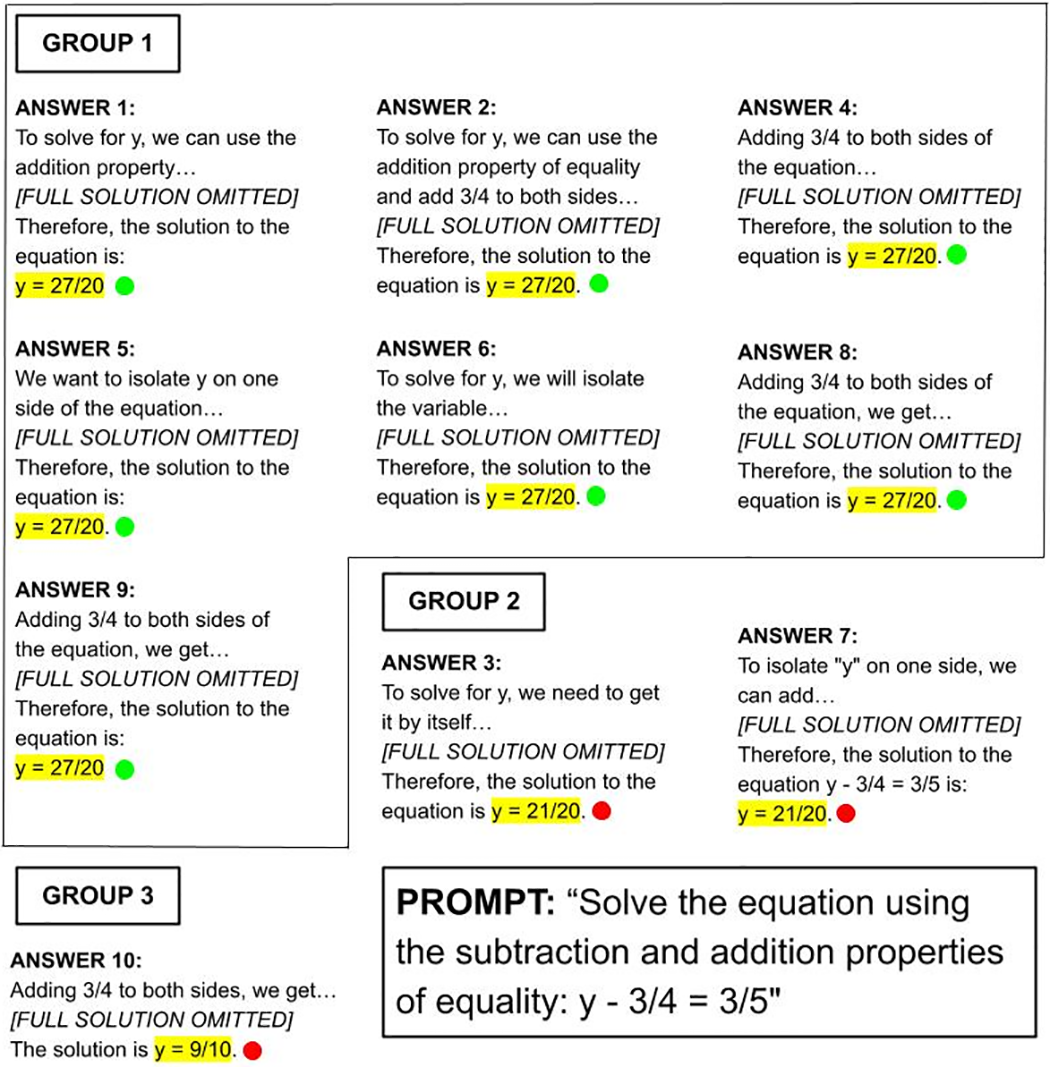

The Berkeley researchers took advantage of the fact that ChatGPT, like humans, is erratic. They asked ChatGPT to answer the same math problem 10 times in a row. I was surprised that a machine might answer the same question differently, but that is what these large language models do. Often the step-by-step process and the answer were the same, but the exact wording differed. Sometimes the methods were bizarre and the results were dead wrong. (See an example in the illustration below.)

Researchers grouped similar answers together. When they assessed the accuracy of the most common answer among the 10 solutions, ChatGPT was astonishingly good. For basic high-school algebra, AI’s error rate fell from 25% to zero. For intermediate algebra, the error rate fell from 47% to 2%. For college algebra, it fell from 27% to 2%.
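The "most common answer" step can be pictured as a simple majority vote. The sketch below is only an illustration, not the researchers' code: the `normalize` helper, the function names, and the ten sample answers are all made up here, and the study's actual method for grouping similar answers may well be different.

```python
from collections import Counter


def normalize(answer: str) -> str:
    """Hypothetical cleanup: lowercase and collapse whitespace so that
    trivially different wordings of the same answer are grouped together."""
    return " ".join(answer.strip().lower().split())


def most_common_answer(answers: list[str]) -> tuple[str, float]:
    """Return the most frequent normalized answer and its share of the votes."""
    if not answers:
        raise ValueError("need at least one answer to vote on")
    counts = Counter(normalize(a) for a in answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(answers)


# Ten invented final answers to one algebra question (illustrative only).
samples = [
    "x = 4", "x = 4", "X = 4 ", "x = 5", "x = 4",
    "x = 4", "x = -4", "x = 4", "x = 5", "x = 4",
]

winner, share = most_common_answer(samples)
print(f"most common answer: {winner} ({share:.0%} of attempts)")
```

In this invented run, three of the 10 attempts are wrong, but the majority answer is still the right one, which is the effect the error rates above describe.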

ChatGPT answered the same algebra question three different ways, but it landed on the right response seven out of 10 times in this example.

However, when the scientists applied this method, which they call “self-consistency,” to statistics, it did not work as well. ChatGPT’s error rate fell from 29% to 13%, but still more than one out of 10 answers was wrong. I think that’s too many errors for students who are learning math.

The big question, of course, is whether these ChatGPT solutions help students learn math better than traditional teaching. In a second part of this study, researchers recruited 274 adults online to solve math problems and randomly assigned a third of them to see these ChatGPT solutions as a “hint” if they needed one. (ChatGPT’s wrong answers were removed first.) On a short test afterwards, these adults improved 17%, compared with learning gains of less than 12% for the adults who could see a different group of hints written by undergraduate math tutors. Those who weren’t offered any hints scored about the same on a post-test as they did on a pre-test.

Those impressive learning results for ChatGPT prompted the study authors to boldly predict that “completely autonomous generation” of an effective computerized tutoring system is “around the corner.” In theory, ChatGPT could instantly digest a book chapter or a video lecture and then immediately turn around and tutor a student on it.

Before I embrace that optimism, I’d like to see how much real students – not just adults recruited online – use these automated tutoring systems. Even in this study, where adults were paid to do math problems, 120 of the roughly 400 participants didn’t complete the work and so their results had to be thrown out. For many kids, and especially students who are struggling in a subject, learning from a computer just isn’t engaging.

This story about AI hallucinations was written by Jill Barshay and produced by The Hechinger Report, a nonprofit, independent news organization focused on inequality and innovation in education.